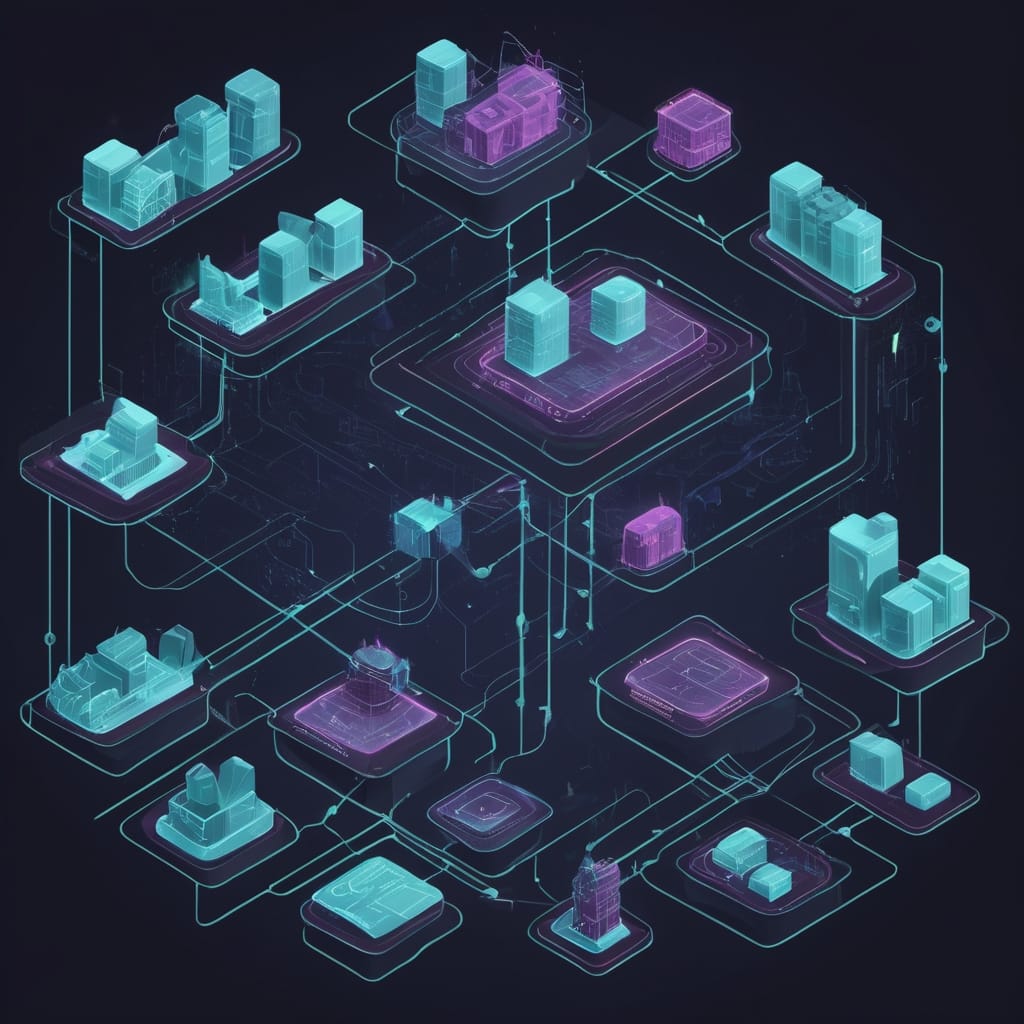

When I set out to build my own Elastic Stack environment, my primary goal was to use Logstash as a flexible data pipeline — pulling data from CouchDB and pushing it into Elasticsearch for fast, scalable search and analysis.

By leveraging Logstash, I gained the ability to connect to various data sources (including CouchDB), apply custom filtering and transformation logic, and seamlessly route structured output to Elasticsearch. The result? Clean, well-organized data ready for indexing, even at scale — all without writing custom ETL scripts.

Let’s briefly explore the key technologies that make this stack so powerful:

CouchDB

CouchDB is an open-source NoSQL database known for its flexibility, high availability, and distributed architecture. It stores data as JSON documents and exposes a RESTful HTTP API. Its document-oriented structure and multi-version concurrency control (MVCC) make it ideal for applications that manage large volumes of semi-structured or unstructured data.

Elasticsearch

Elasticsearch is a distributed search and analytics engine built on top of Apache Lucene. It’s optimized for lightning-fast queries across structured and unstructured data. From full-text search to real-time event monitoring, Elasticsearch is the engine that powers some of the most demanding data platforms in the world.

Kibana

Kibana is the visualization layer of the Elastic Stack. It allows you to explore and analyze your Elasticsearch data through rich dashboards, graphs, and visual tools. Whether you’re building monitoring dashboards, analyzing logs, or exploring ad hoc queries, Kibana provides an intuitive and powerful user interface for interacting with your data in real time.

Logstash

Logstash is the data processing powerhouse of the Elastic Stack. It collects, transforms, and routes logs or event data into Elasticsearch. With its rich set of plugins and pipeline configuration syntax, Logstash allows you to **enrich, normalize, and clean data on the fly** before indexing.

The Elastic Stack

Together, Elasticsearch, Logstash, and Kibana form what’s commonly referred to as the ELK Stack — now officially called the Elastic Stack. When deployed with Docker, this trio becomes incredibly easy to spin up, configure, and scale.

To get started, I looked for a practical way to spin up the entire Elastic Stack using Docker. That’s when I found a fantastic guide from Elastic themselves: “Getting started with the Elastic Stack and Docker Compose: Part 1”. It provided a great foundation and inspired the custom setup I’m about to share with you.

Ready to Build?

Before we dive into containers, make sure you have Docker installed and working properly on your machine. We’ll be orchestrating everything using docker-compose, and to keep things flexible and clean, we’ll start by defining environment variables.

Creating a .env file

The first step is to create a .env file. This file will hold environment variables used by docker-compose.yml, helping us keep configuration centralized and editable.

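    # Compose project and cluster names
    COMPOSE_PROJECT_NAME=acmattos_es_stack
    CLUSTER_NAME=acmattos-docker-cluster
    # Image versions
    COUCHDB_VERSION=3.5.0
    STACK_VERSION=9.0.1
    # Credentials (replace every "changeme" before the first start)
    COUCHDB_USER=changeme
    COUCHDB_PASSWORD=changeme
    ELASTIC_PASSWORD=changeme
    KIBANA_PASSWORD=changeme
    # Ports published on the host
    COUCHDB_PORT=5984
    COUCHDB_SPORT=6984
    ES_PORT=9200
    KIBANA_PORT=5602
    # Memory limits in bytes (1073741824 = 1 GiB)
    ES_MEM_LIMIT=1073741824
    KB_MEM_LIMIT=1073741824
    LS_MEM_LIMIT=1073741824
    # License type read by the es01 service (basic or trial)
    LICENSE=basic
    # Key read by the kibana service; must be at least 32 characters long
    ENCRYPTION_KEY=replace_with_a_random_string_of_32_or_more_characters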

These variables will allow us to quickly update versions, ports, and credentials without modifying the compose file directly — a best practice for both local and production environments.
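Compose substitutes a blank string (with a warning) for any variable that is referenced in the compose file but missing from .env, so a quick cross-check before the first start can save a debugging session. The following is a minimal sketch, not part of the original setup: the function name missing_env_vars is hypothetical, and it only understands the plain ${NAME} form used in this article.

```shell
#!/usr/bin/env bash
# Print every ${NAME} referenced in a compose file that has no NAME= line in the env file.
# Hypothetical helper: handles only the plain ${NAME} syntax (no defaults such as ${NAME:-x}).
missing_env_vars() {
  local compose_file="$1" env_file="$2" name
  grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$compose_file" \
    | tr -d '${}' \
    | sort -u \
    | while read -r name; do
        # Report the name only when the env file does not define it
        grep -q "^${name}=" "$env_file" || echo "$name"
      done
}
```

Running `missing_env_vars docker-compose.yml .env` from the project directory prints one variable name per line, or nothing when every reference is covered.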

Creating Docker Volumes

Before defining the services, let’s declare the Docker volumes that will persist important data across restarts.

    volumes:
      certs_volume:
        driver: local
      es_volume_01:
        driver: local
      kibana_volume:
        driver: local
      logstash_volume_01:
        driver: local
      # Referenced by the setup and logstash01 services, so it must be declared as well
      logstash_certs_volume:
        driver: local
      couchdb_certs_volume:
        driver: local
      couchdb_volume_data:
        driver: local
      couchdb_volume_config:
        driver: local
      couchdb_volume_log:
        driver: local

Volumes in Docker are used to store data independently from container lifecycles. This is crucial for services like Elasticsearch, Logstash, and CouchDB, which need to retain indexes, logs, and documents even if the container is rebuilt or removed.

Each volume here is:

- Named: making it easier to reference in the compose file and reuse.

- Using the local driver: which stores data on the host machine under /var/lib/docker/volumes/.

This setup gives us a persistent and portable development environment — essential when working with real-world data.

What Do These Volumes Represent?

Each of these volumes is used to persist specific types of data for the services in our Elastic Stack. Here’s a breakdown of their purpose based on common use cases:

- certs_volume: Used to store SSL/TLS certificates that secure communication between services like Elasticsearch, Kibana, and Logstash.

- es_volume_01: Stores Elasticsearch data, including indexes and internal metadata required for search operations.

- kibana_volume: Used by Kibana to persist configuration files, logs, or session data across container restarts.

- logstash_volume_01: Holds data relevant to Logstash, such as pipeline definitions, temporary files, or processed logs.

- logstash_certs_volume: Receives the copy of the certificates that the setup service makes for Logstash.

- couchdb_certs_volume: Stores certificates specific to CouchDB, used to enable HTTPS.

- couchdb_volume_data: Contains CouchDB database files and documents, the core data storage for your NoSQL documents.

- couchdb_volume_config: Stores configuration files for CouchDB, including settings related to replication, admin credentials, and network bindings.

- couchdb_volume_log: Captures logs generated by CouchDB, useful for monitoring and debugging database activity.

By separating data into specific named volumes, we ensure data persistence, modularity, and easier backups or upgrades of individual services.

Docker Network Configuration

In our docker-compose.yml, we define a custom network to allow all containers in the stack to communicate internally. Here’s the declaration:

    networks:
      default:
        name: elastic_network
        external: false

This snippet does the following:

- The default entry under networks defines a custom network section in the compose file and overrides the default network behavior used by Docker Compose. All services in this stack will be connected to this named network.

- name: elastic_network assigns a clear and meaningful name to the network. This is especially useful for troubleshooting or for connecting external containers manually later on.

- external: false ensures that Docker Compose creates the network automatically if it doesn’t already exist, rather than expecting it to be pre-created.

By explicitly naming the network, we improve clarity and ensure consistent container communication across services like Elasticsearch, Kibana, Logstash, and CouchDB — all without relying on Docker’s autogenerated network names.

Defining the setup Service

The first service we define is setup. It’s a one-time initialization container responsible for:

- Generating the Certificate Authority (CA) and node certificates for CouchDB, Elasticsearch and Kibana

- Setting appropriate file permissions for certificate usage

- Setting the kibana_system password using the Elasticsearch API

Here’s how it looks in the docker-compose.yml:

    services:
      setup:
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        volumes:
          - certs_volume:/usr/share/elasticsearch/config/certs
          - logstash_certs_volume:/usr/share/logstash/config/certs
          - couchdb_certs_volume:/usr/share/couchdb/config/certs
        user: "0"
        command: >
          bash -c '
            if [ x${ELASTIC_PASSWORD} == x ]; then
              echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
              exit 1;
            elif [ x${KIBANA_PASSWORD} == x ]; then
              echo "Set the KIBANA_PASSWORD environment variable in the .env file";
              exit 1;
            fi;
            if [ ! -f config/certs/ca.zip ]; then
              echo "Creating CA";
              bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
              unzip config/certs/ca.zip -d config/certs;
            fi;
            if [ ! -f config/certs/certs.zip ]; then
              echo "Creating certs";
              echo -ne \
              "instances:\n"\
              "  - name: es01\n"\
              "    dns:\n"\
              "      - es01\n"\
              "      - localhost\n"\
              "    ip:\n"\
              "      - 127.0.0.1\n"\
              "  - name: kibana\n"\
              "    dns:\n"\
              "      - kibana\n"\
              "      - localhost\n"\
              "  - name: couchdb\n"\
              "    dns:\n"\
              "      - couchdb\n"\
              "      - localhost\n"\
              "    ip:\n"\
              "      - 127.0.0.1\n"\
              > config/certs/instances.yml;
              bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
              unzip config/certs/certs.zip -d config/certs;
            fi;
            echo "Copying certs to CouchDB volume";
            cp -r config/certs/* /usr/share/couchdb/config/certs/;
            echo "Copying certs to Logstash volume";
            cp -r config/certs/* /usr/share/logstash/config/certs;
            echo "Setting file permissions";
            chown -R root:root config/certs /usr/share/couchdb/config/certs;
            find config/certs /usr/share/couchdb/config/certs -type d -exec chmod 750 {} \;;
            find config/certs /usr/share/couchdb/config/certs -type f -exec chmod 640 {} \;;
            chown -R 1000:1000 config/certs /usr/share/logstash/config/certs;
            find config/certs /usr/share/logstash/config/certs -type d -exec chmod 750 {} \;;
            find config/certs /usr/share/logstash/config/certs -type f -exec chmod 644 {} \;;
            echo "Waiting for CouchDB availability";
            until curl -s --cacert config/certs/ca/ca.crt https://couchdb:${COUCHDB_SPORT} | grep -q "\"couchdb\""; do sleep 10; done; echo "✔️ CouchDB is up!";
            echo "Waiting for Elasticsearch availability";
            until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done; echo "✔️ Elasticsearch is up!";
            echo "Setting kibana_system password";
            until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done; echo "✔️ Kibana is up!";
            echo "All done!";
          '
        healthcheck:
          test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
          interval: 1s
          timeout: 5s
          retries: 120

Highlights of This Configuration

- Checks for required environment variables: Validates that both ELASTIC_PASSWORD and KIBANA_PASSWORD are set before proceeding.

- Creates a Certificate Authority (CA): Generates a root CA if it doesn’t already exist, promoting reproducibility and eliminating manual steps.

- Issues PEM-formatted certificates for services: Signed certificates are created for es01 (Elasticsearch), kibana, and couchdb.

- Copies all certificates into the CouchDB and Logstash volumes: Ensures CouchDB receives its own keys and CA certificate, enabling encrypted HTTPS access, and makes the same files available to Logstash.

- Applies strict permissions: Enforces ownership and permissions on the certificate directories. Directories get chmod 750; files get chmod 640 on the CouchDB copy and chmod 644 on the Elasticsearch and Logstash copies.

- Waits for service readiness: Uses curl with --cacert to poll https://couchdb:${COUCHDB_SPORT} (for "couchdb" presence) and https://es01:9200 (for "missing authentication credentials").

- Sets the kibana_system password via the Elasticsearch API: Ensures Kibana can securely authenticate using the preconfigured system account.

- Idempotent: Steps are safely re-runnable; existing artifacts (e.g., certs) are detected and reused automatically.

- Healthcheck signals certificate creation: The container becomes healthy as soon as the es01 certificate file exists. The check tests for the file only and does not validate its contents.

This modular approach ensures that all cryptographic operations are isolated and reproducible, while guaranteeing that CouchDB and Elasticsearch are ready and secure before the rest of the stack proceeds.
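The readiness polling in the setup script follows a simple retry pattern: run a probe, sleep, and try again. Here is a minimal standalone sketch of that pattern with a cap on the number of attempts (the setup script itself loops without one). The names wait_for and couchdb_ready are hypothetical, and the fallback port 6984 is an assumption taken from the .env file shown earlier.

```shell
#!/usr/bin/env bash
# Retry a probe command until it succeeds or the attempt budget runs out.
# Usage: wait_for <max_attempts> <sleep_seconds> <command> [args...]
wait_for() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "gave up after ${attempt} attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example probe mirroring the CouchDB check from the setup script (hypothetical wrapper).
couchdb_ready() {
  curl -s --cacert config/certs/ca/ca.crt "https://couchdb:${COUCHDB_SPORT:-6984}" \
    | grep -q '"couchdb"'
}
```

Inside the setup container this could be called as `wait_for 30 10 couchdb_ready`, which gives up after roughly five minutes instead of waiting forever.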

Defining the es01 Service (Elasticsearch)

This is the main Elasticsearch node in the stack. It runs as a single-node cluster, with TLS and authentication enabled. Thanks to the depends_on condition, the service starts only once the setup container reports healthy, which happens as soon as the es01 certificate file exists.

      es01:
        depends_on:
          setup:
            condition: service_healthy
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        labels:
          co.elastic.logs/module: elasticsearch
        volumes:
          - certs_volume:/usr/share/elasticsearch/config/certs
          - es_volume_01:/usr/share/elasticsearch/data
        ports:
          - ${ES_PORT}:9200
        environment:
          - node.name=es01
          - cluster.name=${CLUSTER_NAME}
          - discovery.type=single-node
          - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
          - bootstrap.memory_lock=true
          - xpack.security.enabled=true
          - xpack.security.http.ssl.enabled=true
          - xpack.security.http.ssl.key=certs/es01/es01.key
          - xpack.security.http.ssl.certificate=certs/es01/es01.crt
          - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.enabled=true
          - xpack.security.transport.ssl.key=certs/es01/es01.key
          - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
          - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.verification_mode=certificate
          - xpack.license.self_generated.type=${LICENSE}
        mem_limit: ${ES_MEM_LIMIT}
        ulimits:
          memlock:
            soft: -1
            hard: -1
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120

Highlights of this configuration:

- Uses TLS for both HTTP and internal transport communication

- Reads passwords, cluster name, and license type from environment variables

- Mounts certificates and persistent storage via volumes

- Waits for the setup service to report healthy before starting

- Includes a robust healthcheck to ensure Elasticsearch is ready to serve secure requests

This setup ensures that Elasticsearch starts securely, only after all the necessary certificates and passwords are in place — providing a strong foundation for the rest of the stack.
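The mem_limit value comes from ES_MEM_LIMIT, which the .env file expresses in bytes: 1073741824 is exactly 1 GiB. When you want a different limit, a tiny helper avoids arithmetic slips. This is only a sketch, and the function name gib_to_bytes is hypothetical.

```shell
#!/usr/bin/env bash
# Convert a whole number of GiB to bytes for the *_MEM_LIMIT variables.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}
```

For example, `gib_to_bytes 2` produces the value to use for a 2 GiB limit.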

Defining the kibana Service

The kibana container provides the user interface for interacting with Elasticsearch. It connects securely to the Elasticsearch instance using TLS, and waits for es01 to be healthy before starting.

      kibana:
        depends_on:
          es01:
            condition: service_healthy
        image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
        labels:
          co.elastic.logs/module: kibana
        volumes:
          - certs_volume:/usr/share/kibana/config/certs
          - kibana_volume:/usr/share/kibana/data
        ports:
          - ${KIBANA_PORT}:5601
        environment:
          - SERVERNAME=kibana
          - ELASTICSEARCH_HOSTS=https://es01:9200
          - ELASTICSEARCH_USERNAME=kibana_system
          - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
          - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
          - XPACK_SECURITY_ENCRYPTIONKEY=${ENCRYPTION_KEY}
          - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=${ENCRYPTION_KEY}
          - XPACK_REPORTING_ENCRYPTIONKEY=${ENCRYPTION_KEY}
        mem_limit: ${KB_MEM_LIMIT}
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120

Highlights of this configuration:

- Connects to Elasticsearch over HTTPS using a trusted CA certificate

- Waits for es01 to be healthy before launching

- Uses the kibana_system account configured by the setup service

- Stores Kibana data and TLS certs in mounted volumes

- Includes three encryption keys to enable secure sessions, saved objects, and reporting

- Has a reliable healthcheck based on the HTTP redirect that occurs on first load

This configuration ensures that Kibana is securely connected, stable, and ready to serve as the visual hub for your entire Elastic Stack.
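The three encryption settings all read ${ENCRYPTION_KEY}, which Kibana expects to be at least 32 characters long. One way to produce a suitable value is to hex-encode 32 random bytes. The sketch below uses only standard Unix tools; the function name generate_encryption_key is hypothetical, and `openssl rand -hex 32` would serve equally well where OpenSSL is installed.

```shell
#!/usr/bin/env bash
# Print 32 random bytes as 64 lowercase hexadecimal characters.
generate_encryption_key() {
  head -c 32 /dev/urandom | od -An -v -tx1 | tr -d ' \n'
  echo
}
```

The result can be appended to the environment file with `echo "ENCRYPTION_KEY=$(generate_encryption_key)" >> .env`. Keep the key stable across restarts, since Kibana uses it to decrypt previously saved data.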

Defining the logstash01 Service

The logstash01 container is responsible for processing and forwarding data into Elasticsearch. It runs a pre-defined pipeline and uses mounted volumes for configuration files, templates, and data ingestion.

      logstash01:
        depends_on:
          es01:
            condition: service_healthy
          kibana:
            condition: service_healthy
          couchdb:
            condition: service_healthy
        image: docker.elastic.co/logstash/logstash:${STACK_VERSION}
        labels:
          co.elastic.logs/module: logstash
        user: root
        volumes:
          - logstash_certs_volume:/usr/share/logstash/certs:ro
          - logstash_volume_01:/usr/share/logstash/data
        environment:
          - xpack.monitoring.enabled=false
          - ELASTIC_USER=elastic
          - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
          - ELASTIC_HOSTS=https://es01:9200
          - COUCHDB_USER=${COUCHDB_USER}
          - COUCHDB_PASSWORD=${COUCHDB_PASSWORD}

Highlights of this configuration:

- Waits for Elasticsearch (es01), Kibana, and CouchDB to be healthy before starting

- Uses mounted volumes for TLS certificates (read-only) and Logstash data; the pipeline definition (logstash.conf), ingest templates, and input data for testing or seeding need mounts of their own, which the listing above does not include

- Connects securely to Elasticsearch via HTTPS, using ELASTIC_HOSTS

- Disables X-Pack monitoring for a lighter footprint in development

- Runs as root to ensure file access (can be adjusted if needed)

This setup ensures that Logstash is fully wired into the stack with its own custom pipeline, ready to ingest and transform data for indexing in Elasticsearch.

Defining the couchdb Service

The couchdb container provides a secure, document-oriented NoSQL store for your stack. It uses TLS certificates generated by the setup container to enable HTTPS communication and includes a healthcheck that ensures it’s running and ready.

      couchdb:
        depends_on:
          setup:
            condition: service_healthy
        image: couchdb:${COUCHDB_VERSION}
        restart: always
        ports:
          - ${COUCHDB_PORT}:5984
          - ${COUCHDB_SPORT}:6984
        volumes:
          - couchdb_certs_volume:/opt/couchdb/certs
          - couchdb_volume_data:/opt/couchdb/data
          - couchdb_volume_config:/opt/couchdb/etc/local.d
          - couchdb_volume_log:/opt/couchdb/var/log
          - ./couchdb-ssl.ini:/opt/couchdb/etc/local.d/couchdb-ssl.ini:ro
        environment:
          COUCHDB_USER: ${COUCHDB_USER}
          COUCHDB_PASSWORD: ${COUCHDB_PASSWORD}
          COUCHDB_COOKIE: couchdb_cluster_cookie
          ERL_FLAGS: "-ssl_dist_opt server_certfile '/opt/couchdb/certs/couchdb/couchdb.crt' \
            -ssl_dist_opt server_keyfile '/opt/couchdb/certs/couchdb/couchdb.key' \
            -ssl_dist_opt server_cacertfile '/opt/couchdb/certs/ca/ca.crt'"
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s --cacert /opt/couchdb/certs/ca/ca.crt https://localhost:6984/_up | grep -q '\"status\":\"ok\"'"
            ]
          interval: 10s
          timeout: 5s
          retries: 10

Highlights of this configuration:

- Waits for the setup container to finish creating certificates before starting

- Enables HTTPS using custom CA-signed certificates stored in couchdb_certs_volume

- Uses named volumes to persist data, configuration, and logs

- Sets up authentication and clustering cookie via environment variables

- Performs a healthcheck over HTTPS using CouchDB’s native /_up endpoint, with certificate validation

This configuration ensures that CouchDB is integrated securely into the Elastic Stack, with encrypted communication and startup only after all dependencies are in place.

The couchdb-ssl.ini File

To enable HTTPS inside CouchDB, we mount the following .ini configuration into the container:

    [daemons]
    httpsd = {chttpd, start_link, [https]}
    [ssl]
    port = 6984
    enable = true
    cert_file = /opt/couchdb/certs/couchdb/couchdb.crt
    key_file = /opt/couchdb/certs/couchdb/couchdb.key

This file enables the httpsd daemon and binds it to port 6984, pointing it to the certificate and key files previously generated by the setup container and mounted via volume.

Common .ini Mounting Pitfalls on Windows

Mounting configuration files into CouchDB on Windows can lead to issues if the .ini file isn’t formatted correctly:

- Incorrect line endings: Windows uses CRLF, but CouchDB expects LF (Unix-style).

- Encoding with BOM: Saving the file as UTF-8 with BOM may cause CouchDB to fail silently.

- Bad relative paths: If using Git Bash or WSL, ./couchdb-ssl.ini might not resolve correctly in the container context.

To avoid these problems:

- Ensure your editor is saving with LF line endings (not CRLF)

- Use UTF-8 without BOM

- Confirm the path matches where the .ini file lives relative to your Docker context

- On Unix-like systems, run: chmod 644 ./couchdb-ssl.ini

- On Windows, set read permissions using: icacls .\couchdb-ssl.ini /grant *S-1-1-0:R
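The two encoding problems listed above (CRLF line endings and a UTF-8 BOM) are easy to detect and repair from a Unix-like shell such as WSL or Git Bash. The sketch below assumes GNU sed for the in-place edits; the function names has_crlf, has_bom, and normalize_ini are hypothetical.

```shell
#!/usr/bin/env bash
# Detect carriage returns anywhere in the file.
has_crlf() {
  grep -q "$(printf '\r')" "$1"
}

# Detect a UTF-8 byte order mark (bytes EF BB BF) at the start of the file.
has_bom() {
  [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]
}

# Strip a leading BOM and convert CRLF line endings to LF, editing the file in place.
normalize_ini() {
  LC_ALL=C sed -i '1s/^\xEF\xBB\xBF//' "$1"
  LC_ALL=C sed -i 's/\r$//' "$1"
}
```

A typical check before starting the stack would be `has_crlf ./couchdb-ssl.ini && normalize_ini ./couchdb-ssl.ini`.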

Starting the Elastic Stack

With everything configured, it’s time to launch the stack.

Run the following command from the directory containing your docker-compose.yml:

    docker-compose --env-file .env up -d

This command will:

- Pull any missing images and start all containers in detached mode (-d)

- Automatically apply all environment variables from .env

- Create volumes and the network if they don’t exist yet

Validating the Environment

You can check if everything is running smoothly using:

    docker-compose ps

Each service should show a healthy or Up status. If you see starting or unhealthy, check the logs:

    docker-compose logs -f <service_name>

Accessing the Services

Once all containers are healthy, you can access:

- Kibana: http://localhost:${KIBANA_PORT}. Use elastic and ${ELASTIC_PASSWORD} to log in.

- CouchDB: https://localhost:${COUCHDB_SPORT} (the HTTPS port; plain HTTP is published on ${COUCHDB_PORT}). Use ${COUCHDB_USER} and ${COUCHDB_PASSWORD}. You may need to accept the certificate in your browser, since it is signed by the stack’s own CA.

- Elasticsearch: Accessible programmatically at https://localhost:${ES_PORT} using basic auth with elastic:${ELASTIC_PASSWORD}.

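To test Elasticsearch directly (this assumes the CA certificate has been copied out of the certs volume to ./certs/ca/ca.crt on the host, and that ELASTIC_PASSWORD and ES_PORT are set in your shell):

    curl -u elastic:${ELASTIC_PASSWORD} --cacert ./certs/ca/ca.crt https://localhost:${ES_PORT}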

You should receive a JSON response with cluster information.
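Since the CA certificate lives inside a Docker volume and the credentials live in .env, a small wrapper can fetch both before calling curl. This is a sketch under a few assumptions: it relies on the `docker compose cp` subcommand from Docker Compose v2 (the article otherwise uses the docker-compose spelling), the destination ./ca.crt is arbitrary, and the names env_value and check_elasticsearch are hypothetical.

```shell
#!/usr/bin/env bash
# Read one KEY=value entry from an env file.
# Hypothetical helper: no handling of quotes, comments on the same line, or "export".
env_value() {
  grep "^$2=" "$1" | head -n 1 | cut -d '=' -f 2-
}

# Copy the CA certificate out of the es01 container, then query the cluster with it.
check_elasticsearch() {
  docker compose cp es01:/usr/share/elasticsearch/config/certs/ca/ca.crt ./ca.crt
  curl --cacert ./ca.crt \
       -u "elastic:$(env_value .env ELASTIC_PASSWORD)" \
       "https://localhost:$(env_value .env ES_PORT)"
}
```

Run `check_elasticsearch` from the directory that holds docker-compose.yml and .env once the es01 container is healthy.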

You’re Ready to Build!

At this point, your secure Elastic Stack with CouchDB integration is fully operational. You can now:

- Start designing Logstash pipelines

- Ingest and index data into Elasticsearch

- Build interactive Kibana dashboards

- Explore CouchDB contents via its REST API

Useful Resources

Helpful CLI Commands

    # View logs for a specific service
    docker-compose logs -f <service_name>
    # Restart a specific container
    docker-compose restart <service_name>
    # Stop the stack
    docker-compose down
    # Rebuild from scratch (the -v flag also deletes the named volumes and their data)
    docker-compose down -v && docker-compose --env-file .env up --build -d

Conclusion

Part 1 is over. Congratulations! You’ve successfully built a Docker-based Elastic Stack environment.

You can download a version of the code showcased in this article from my GitHub repository.

You are now ready to continue with Part 2, where we’ll design a complete pipeline using Logstash to extract data from CouchDB, transform it, and load it into Elasticsearch for analysis. Thank you for reading this article!
